I have a Pandas DataFrame that looks like this:

df = pd.DataFrame({'col1': [1, 2, 3],

'col2': [4, 5, 6],

'col3': [7, 8, 9]})

df

col1 col2 col3

0 1 4 7

1 2 5 8

2 3 6 9

I would like to create a Pandas DataFrame like this:

df_new

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

Is there built-in or combination of built-in Pandas methods that can achieve this?

Even if there are duplicates in df, I would like the output to be the same format. In other words:

df

col1 col2 col3

0 1 4 7

1 2 5 8

2 2 6 8

df_new

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 8

3 2 4 7

4 2 5 8

5 2 6 8

6 2 4 7

7 2 5 8

8 2 6 8

Thanks in advance for any suggestions!

2

6 Answers

6

Reset to default

Highest score (default)

Trending (recent votes count more)

Date modified (newest first)

Date created (oldest first)

I would also have gone for a cross merge as suggested by @Henry in comments:

out = df[['col1']].merge(df[['col2', 'col3']], how='cross').reset_index(drop=True)

Output:

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

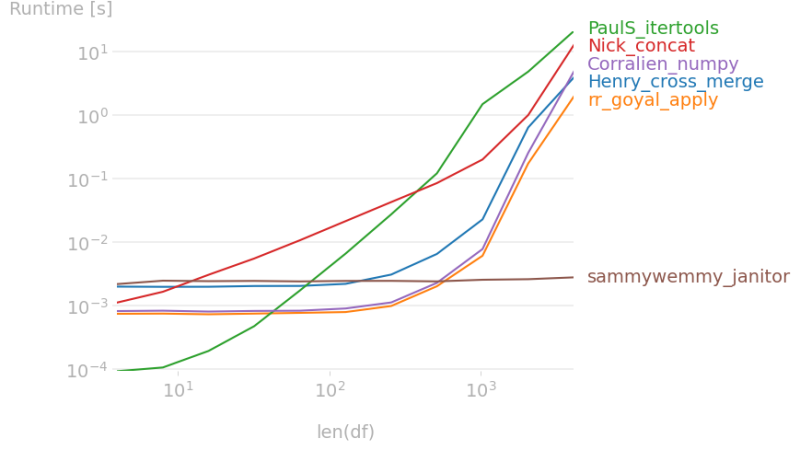

Comparison of the different approaches:

Note that @sammywemmy’s approach behaves differently when rows are duplicated, which leads to a non comparable timing.

11

-

Interesting timing data. It kind of looks like the other solutions might be going to catch mine as length increases…

– Nick2 days ago

-

for the nick_concat solution, I suspect the copies affect the speed (a copy for drop, a copy for assign, a copy for reset_index).

– sammywemmy2 days ago

-

@Nick I added some more rows (it previously crashed on another computer as the combinations are memory expensive). Looks like they converge with still a difference

– mozway2 days ago

-

1

@sammywemmy regarding your approach, it doesn't provide the same output as the others when there are duplicated rows (thus the different timing)

– mozway2 days ago

-

1

@mozway Cool stuff. Interesting to see itertools as the slowest option. I love questions that get such a variety of responses and someone doing the timing.

– Nick2 days ago

I would love to see a more pythonic or a ‘pandas-exclusive’ answer, but this one also works good!

import pandas as pd

import numpy as np

n=3

df = pd.DataFrame({'col1': [1, 2, 3],

'col2': [4, 5, 6],

'col3': [7, 8, 9]})

# Edited and added this new method.

df2 = pd.DataFrame({df.columns[0]:np.repeat(df['col1'].values, n)})

df2[df.columns[1:]] = df.iloc[:,1:].apply(lambda x: np.tile(x, n))

""" Old method.

for col in df.columns[1:]:

df2[col] = np.tile(df[col].values, n)

"""

print(df2)

You could concatenate copies of the dataframe, with col1 replaced in each copy by each of the values in col1:

out = df.drop('col1', axis=1)

out = pd.concat([out.assign(col1=c1) for c1 in df['col1']]).reset_index(drop=True)

Output:

col2 col3 col1

0 4 7 1

1 5 8 1

2 6 9 1

3 4 7 2

4 5 8 2

5 6 9 2

6 4 7 3

7 5 8 3

8 6 9 3

If you prefer, you can then re-order the columns back to the original using

out = out[['col1', 'col2', 'col3']]

You can use np.repeat and np.tile to get the expected output:

import numpy as np

N = 3

cols_to_repeat = ['col1'] # 1, 1, 1, 2, 2, 2

cols_to_tile = ['col2', 'col3'] # 1, 2, 1, 2, 1, 2

data = np.concatenate([np.tile(df[cols_to_tile].values.T, N).T,

np.repeat(df[cols_to_repeat].values, N, axis=0)], axis=1)

out = pd.DataFrame(data, columns=cols_to_tile + cols_to_repeat)[df.columns]

Output:

>>> out

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

You can create a generic function:

def repeat(df: pd.DataFrame, to_repeat: list[str], to_tile: list[str]=None) -> pd.DataFrame:

to_tile = to_tile if to_tile else df.columns.difference(to_repeat).tolist()

assert df.columns.difference(to_repeat + to_tile).empty, "all columns should be repeated or tiled"

data = np.concatenate([np.tile(df[to_tile].values.T, N).T,

np.repeat(df[to_repeat].values, N, axis=0)], axis=1)

return pd.DataFrame(data, columns=to_tile + to_repeat)[df.columns]

repeat(df, ['col1'])

Usage:

>>> repeat(df, ['col1'])

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

0

Another possible solution, which is based on itertools.product:

from itertools import product

pd.DataFrame([[x, y[0], y[1]] for x, y in

product(df['col1'], zip(df['col2'], df['col3']))],

columns=df.columns)

Output:

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

One option is with complete from pyjanitor:

# pip install pyjanitor

import janitor

import pandas as pd

df.complete('col1', ('col2','col3'))

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 9

3 2 4 7

4 2 5 8

5 2 6 9

6 3 4 7

7 3 5 8

8 3 6 9

complete primarily is for exposing missing rows – the output above just happens to be a nice side effect. A more appropriate, albeit quite verbose option is expand_grid:

# pip install pyjanitor

import janitor as jn

import pandas as pd

others = {'df1':df.col1, 'df2':df[['col2','col3']]}

jn.expand_grid(others=others).droplevel(axis=1,level=0)

col1 col2 col3

0 1 4 7

1 1 5 8

2 1 6 8

3 2 4 7

4 2 5 8

5 2 6 8

6 2 4 7

7 2 5 8

8 2 6 8

1

-

1

It's nice and short for the non-duplicates case but unfortunately doesn't handle the other case correctly.

– mozway2 days ago

Your Answer

Post as a guest

Required, but never shown

Post as a guest

Required, but never shown

By clicking “Post Your Answer”, you agree to our terms of service and acknowledge that you have read and understand our privacy policy and code of conduct.

Not the answer you're looking for? Browse other questions tagged

or ask your own question.

or ask your own question.

Can you include some more details about your scaling logic? It looks like you're taking the Cartesian product between col1 and the rest of the columns which would just be a standard cross join between col1 and [col2, col3] which would be significantly faster than manual scaling. Something like

df[['col1']].merge(df[['col2', 'col3']], how='cross').reset_index(drop=True)Is this correct or do you actually just need to scale your DataFrame a fixed number of times based on column values or otherwise?♦

2 days ago

@HenryEcker. Great use of

how='cross'! It could also beto_tile=['col1']; df[to_tile].merge(df[df.columns.difference(to_tile)], how='cross').reset_index(drop=True)2 days ago

|